It took me a while to figure out how to generate reports in SpecFlow. After all, now that we have all these lovely specifications written, and the code written to satisfy our requirements, wouldn’t it be nice to generate a report for project managers, customers, or whoever, so they can see progress on certain areas of functionality?

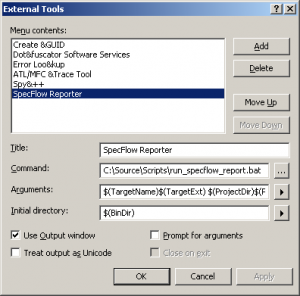

There is a command line tool, specflow.exe, that can generate two types of reports. The documentation could do with some work, as it is not clear what some of the parameters refer to. I had to look at the source code to find out what some parameters meant and what the defaults were. (I am planning on submitting a patch to the team with improved help docs!)

It is useful to add the SpecFlow directory to your PATH so that you can run specflow from any folder. By default, the installation directory is C:\Program Files\TechTalk\SpecFlow
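If you drive your build from a script, one option is to extend the PATH only for that script and leave the machine-wide setting alone. Here is a minimal Python sketch of that idea. The function name `env_with_specflow` is my own invention, and the directory is just the default install location mentioned above, so adjust it if yours differs.

```python
import os

# Default install directory quoted in this post; change it if yours differs.
SPECFLOW_DIR = r"C:\Program Files\TechTalk\SpecFlow"


def env_with_specflow(env=None, specflow_dir=SPECFLOW_DIR):
    """Return a copy of env whose PATH also contains specflow_dir.

    env_with_specflow is a hypothetical helper, not part of SpecFlow.
    """
    env = dict(os.environ if env is None else env)
    entries = [p for p in env.get("PATH", "").split(os.pathsep) if p]
    # Only append the directory once, so repeated calls do not grow PATH.
    if specflow_dir not in entries:
        entries.append(specflow_dir)
    env["PATH"] = os.pathsep.join(entries)
    return env
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when launching specflow, so nothing outside the script is changed.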

Test Execution Report

This is a high-level summary showing how many scenarios have passed or failed, as well as timings of the steps. The report uses the NUnit results XML file as its input, which wasn’t obvious.

The syntax for this command is:

specflow nunitexecutionreport VisualStudioProjectFile /xmlTestResult:PathToNunitTestResultXMLFile

In my case, I execute:

specflow nunitexecutionreport Specs.csproj /xmlTestResult:bin\debug\TestResult.xml
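Because the report depends on the NUnit results file, it can help to check that the file exists before calling specflow. The following is a rough Python sketch of a wrapper that does this. The function names are hypothetical, and it assumes specflow is already on your PATH; the command itself is exactly the one shown above.

```python
import subprocess
from pathlib import Path


def execution_report_command(project_file, xml_test_result):
    """Build the argument list for the nunitexecutionreport command."""
    return [
        "specflow",
        "nunitexecutionreport",
        str(project_file),
        f"/xmlTestResult:{xml_test_result}",
    ]


def run_execution_report(project_file, xml_test_result):
    """Run the report, failing early if the NUnit results are missing."""
    # The report is built from NUnit's output, so stop with a clear
    # message if the tests have not been run yet.
    if not Path(xml_test_result).is_file():
        raise FileNotFoundError(
            f"NUnit result file not found: {xml_test_result}"
        )
    # check=True raises CalledProcessError if specflow exits with an error.
    subprocess.run(
        execution_report_command(project_file, xml_test_result), check=True
    )
```

Calling `run_execution_report("Specs.csproj", r"bin\debug\TestResult.xml")` from the project folder would reproduce my command, with the extra check in front of it.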

Step Definition Report

This seems to be a way of seeing what steps you have written, and how many times they are called. We were able to identify a number of steps that had become obsolete during the refactoring of some specs.

The syntax for this command is:

specflow stepdefinitionreport VisualStudioProjectFile

In my case, I execute:

specflow StepDefinitionReport Specs.csproj
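If you want both reports after every test run, the two commands can be chained in a small script. This is only a sketch in Python: `report_commands` and `generate_reports` are names I made up, specflow is assumed to be on the PATH, and the `run` parameter exists purely so the function can be tried out without SpecFlow installed.

```python
import subprocess


def report_commands(project_file, xml_test_result):
    """Return the two specflow invocations described in this post."""
    return [
        ["specflow", "nunitexecutionreport", project_file,
         f"/xmlTestResult:{xml_test_result}"],
        ["specflow", "stepdefinitionreport", project_file],
    ]


def generate_reports(project_file, xml_test_result, run=subprocess.run):
    """Run each report in turn, stopping at the first failure."""
    for command in report_commands(project_file, xml_test_result):
        print("Running:", " ".join(command))
        # With the default runner, check=True raises CalledProcessError
        # if specflow returns a non-zero exit code.
        run(command, check=True)
```

In my case the call would be `generate_reports("Specs.csproj", r"bin\debug\TestResult.xml")`, run after NUnit has written its results file.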